Last Updated on February 26, 2026

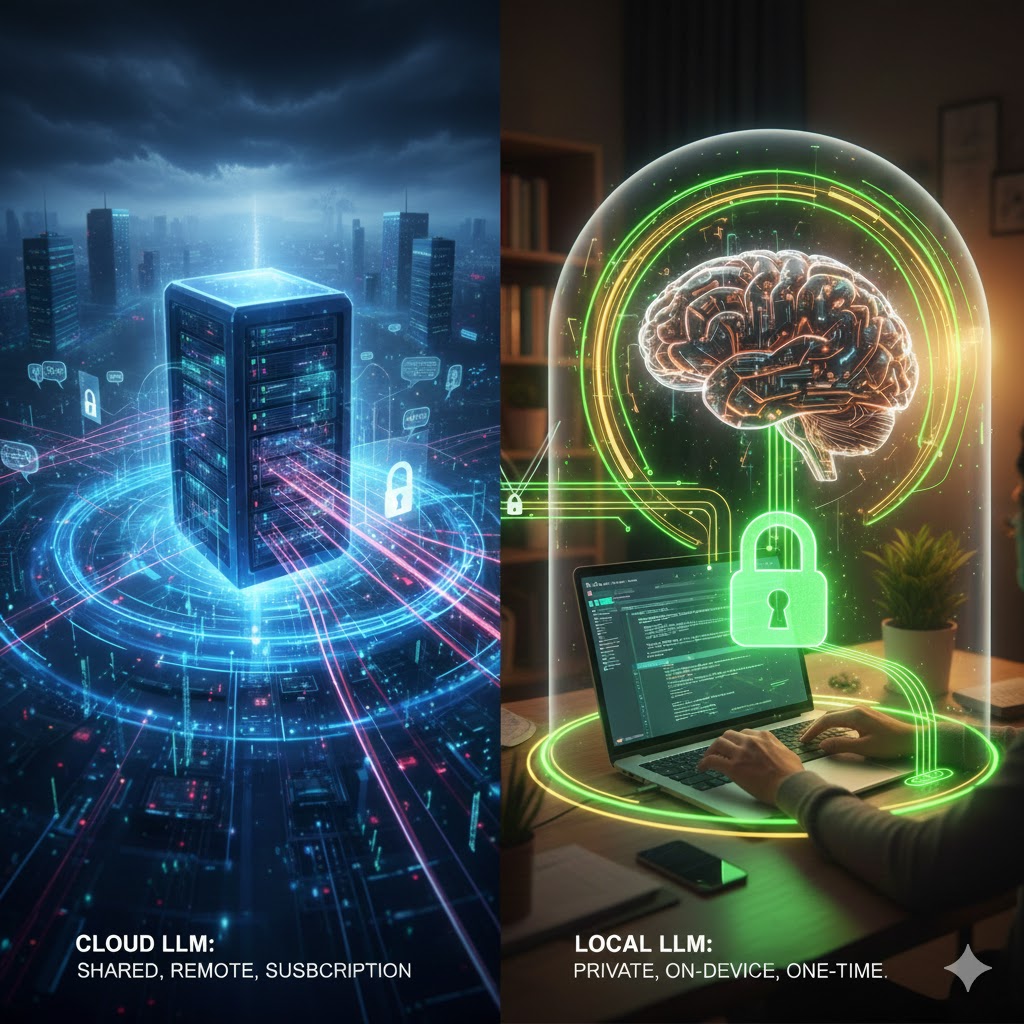

The landscape of document productivity has shifted. While cloud-based assistants were the first to arrive, the demand for privacy has made Local LLMs for Microsoft Word a preferred choice for legal, medical, and corporate professionals.

Using Local LLMs for Microsoft Word allows you to process sensitive data without an internet connection, effectively eliminating the “Cloud Tax” and security risks associated with third-party servers. By utilizing the GPTLocalhost architecture and the Microsoft Office Add-in specification, you can now run world-class LLMs directly on your own hardware.

This guide tests popular Local LLMs for Microsoft Word, providing the technical data you need to choose the right private AI for your specific workflow.

🛠️ The Local AI Ecosystem: From Setup to Strategy

To get the most out of AI-assisted writing in Microsoft Word, you need the right foundation. We have organized our knowledge base into core pillars designed to help you build your own secure, AI-powered drafting environment:

- Local AI Infrastructure Guide: A curated collection of setups for running local AI on your own hardware. This guide details various host configurations and secure API connections, empowering you to build a custom, offline environment that keeps your professional drafts 100% private.

- Local LLM Benchmarks for Microsoft Word (This Page): A curated collection of performance data and empirical tests for top private models. This guide compares local LLMs by their effectiveness in professional tasks such as contract review, text rewriting, and summarization, helping you select a suitable model for your work.

- Private AI Writing Workflows: Put your local setup into action with a growing collection of real-world use cases powered by GPTLocalhost. Learn to manage your workflow with features for offline bulk rewriting, secure translation, and automated formatting, all while keeping your drafts strictly on your own hardware.

- Hybrid AI Strategy for Word: Maximize performance while minimizing costs by combining local models with pay-as-you-go cloud LLMs. This guide demonstrates how to use the “best of both worlds”—leveraging local privacy for sensitive drafting and cloud power for complex tasks—using LLM Proxy and smart local redaction techniques to ensure your most confidential data never leaves your machine.

- For answers to common technical questions, visit our FAQ section or reach out to our team at support@gptlocalhost.com. We are here to help you scale your infrastructure and redefine your professional writing workflow.

👉 Empirical Benchmarks: First-Hand Tested Local LLMs for Microsoft Word

1. Top Models for Text Rewriting

When selecting Local LLMs for Microsoft Word for text transformation, the priority is maintaining the original document’s structural integrity while ensuring the new tone is consistent with your professional goals. The models we have selected for this section are based on our daily production use and the results from our internal benchmarks.

- Microsoft Phi-4: The Phi-4 series consists of small language models (SLMs) that excel at text-based tasks, including text rewriting. They are known for strong performance and efficiency relative to their size, making them suitable for summarization, writing assistance, and rephrasing.

- gpt-oss-20b: gpt-oss-20b is an open-weight model released by OpenAI that can be used effectively for text rewriting. It is designed for general text generation and reasoning tasks, and it can be fine-tuned for specific style or tone requirements.

2. Top Models for Summarization

When selecting models for summarization, you must ensure the model has a large enough context window to ingest your entire document without losing track of early details. The following recommendations are based on our own daily use and the test results documented in our blog posts.

- Gemma-3: Gemma 3 models are well-suited for a variety of text generation and image understanding tasks, including summarization. They feature a large 128K-token context window, enabling them to process and condense substantial amounts of information, such as multi-page articles or large documents.

- Mistral-Small: Mistral Small is a highly effective and efficient model for text summarization, specifically designed for low-latency applications and handling long documents. It is considered one of the top models in its class for this task, often outperforming larger or competitor models in detailed summaries.
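The context-window requirement above can be checked before you send a document to a model. The following is a minimal sketch, not a precise measurement: it assumes roughly 4 characters per token for English text (a common heuristic, not any model's real tokenizer), treats a 128K window as 128,000 tokens, and uses hypothetical helper names `estimate_tokens` and `fits_in_context`.

```python
# Rough check of whether a document fits in a model's context window.
# ASSUMPTION: ~4 characters per token is only a heuristic for English text;
# the real count depends on the model's tokenizer and the language used.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length (rounded up)."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(text: str, context_window: int,
                    reserve_for_output: int = 2_000) -> bool:
    """Return True if the text plus a reserved output budget fits.

    reserve_for_output is a hypothetical allowance for the summary itself,
    since prompt and response share the same window.
    """
    return estimate_tokens(text) + reserve_for_output <= context_window


if __name__ == "__main__":
    document = "word " * 50_000  # stand-in for a long document
    print(estimate_tokens(document))
    print(fits_in_context(document, context_window=128_000))
```

If the check fails, one option is to split the document into sections and summarize each one separately before combining the results.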

3. Top Models for Contract Review

When selecting models for contract review, it is essential to use a model that respects the rigid structure of legal documents, accurately identifying obligations and risks while maintaining the strict privacy required for sensitive agreements. Notably, among all the models we tested, IBM Granite is the only model that explicitly addresses contract analysis as a core use case in its official documentation.

- IBM Granite: IBM's documentation includes a recipe that provides an automated solution for contract analysis using Granite and Docling. It simplifies the process of extracting, analyzing, and understanding key details from contract documents, enabling users to identify important clauses and terms, assess potential risks and obligations, and generate actionable insights for better decision-making.

4. Top Models for Math Reasoning

When selecting models for mathematical reasoning, it is vital to choose a model that supports advanced “Chain-of-Thought” (CoT) processing to verify each step of a calculation. A reliable math-focused model must follow long chains of logic without losing track of intermediate results when handling complex formulas. The models highlighted below exceeded our expectations in the tests documented in our blog posts.

- DeepSeek-R1: DeepSeek-R1 is a reasoning model known for strong performance in math, coding, and logical inference, achieving results comparable to top models like OpenAI’s o1. It was trained with Reinforcement Learning (RL) using Group Relative Policy Optimization (GRPO), which lets it discover and refine reasoning strategies by rewarding correct conclusions. This makes it well suited to detailed, step-by-step problem-solving.

- Phi-4-mini-reasoning: This is a 3.8B parameter model from Microsoft, purpose-built for mathematical and logical reasoning. Despite its compact size, it leverages a 128K context window and fine-tuning on synthetic math data to handle formal proofs and multi-step word problems. It is optimized for resource-constrained environments like edge devices, balancing strong reasoning with efficiency.

5. Additional Tested Models & Demo Videos

We evaluated other local LLMs as proofs of concept to verify their feasibility and performance. Each entry below represents a successful integration test using GPTLocalhost for running private AI within Microsoft Word.

- Using Apple Intelligence on macOS. Watch Demo →

- Using DeepSeek-R1-0528 or Phi-4 for Math Reasoning. Watch Demo →

- Using Skywork-OR1 for Math Reasoning. Watch Demo →

- Using Gemma 3 (27B) for Summarization. Watch Demo →

- Using QwQ-32B to compare 9.9 and 9.11. Watch Demo →

- Using DeepSeek-R1 to calculate IQ distributions. Watch Demo →

- Using Phi-4 for Text Completion. Watch Demo →

- Using GLM-4-32B-0414 or Gemma-3-27B-IT-QAT for Creative Writing. Watch Demo →

6. How to Set Up Your Local LLMs for Microsoft Word

Getting started with private AI is simple with GPTLocalhost.

- Download GPTLocalhost: Start for free to test local LLMs on your hardware with no credit card required. For full feature access, choose a flexible, low-cost monthly subscription or secure a one-time license to run the Word Add-in locally forever with no recurring fees.

- Choose Your Model: Select any of the local LLMs mentioned above, or explore more by testing new models to find the perfect match for your unique case.

- Deploy Locally: Run the model using your preferred LLM backend (LM Studio, Ollama, AnythingLLM, etc.).

- Draft with Peace of Mind: Enjoy 100% private AI in Microsoft Word without the worry of data leaks.
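Before connecting Word, it can help to confirm that your local backend responds. The sketch below is an illustration under stated assumptions, not a description of how GPTLocalhost communicates with the backend: it assumes the backend exposes an OpenAI-compatible chat endpoint on its default local port (11434 for Ollama, 1234 for LM Studio), and the model tag `phi4` and the helper names are placeholders for whatever you have installed.

```python
import json
import urllib.request

# ASSUMPTION: default local endpoints for OpenAI-compatible servers.
# Adjust the port if you changed it in your backend's settings.
OLLAMA_URL = "http://localhost:11434/v1/chat/completions"
LM_STUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_chat_request(model: str, instruction: str, text: str) -> dict:
    """Build an OpenAI-style chat payload for a rewriting task."""
    return {
        "model": model,  # hypothetical tag; use the name your backend lists
        "messages": [
            {"role": "system", "content": instruction},
            {"role": "user", "content": text},
        ],
        "stream": False,  # ask for one complete response
    }


def send_chat_request(url: str, payload: dict, timeout: float = 120.0) -> str:
    """POST the payload to the local server and return the reply text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Example usage (requires a running backend with the model loaded):
#   payload = build_chat_request(
#       "phi4", "Rewrite the text in a formal tone.", "pls send the contract asap"
#   )
#   print(send_chat_request(OLLAMA_URL, payload))
```

Because the request only targets `localhost`, the text stays on your machine as long as the backend itself runs locally.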

Integrating Local LLMs for Microsoft Word is the ultimate upgrade for the privacy-conscious professional. Whether you rely on the technical precision of Phi-4 for text rewriting or the expansive context of Gemma for summarization, the power to choose—and explore more—is now entirely in your hands.

7. Frequently Asked Questions (FAQ)

Is it safe to use Local LLMs for Microsoft Word with sensitive data?

Yes. Unlike cloud-based AI, using Local LLMs for Microsoft Word via GPTLocalhost ensures that your text never leaves your machine. Since the model runs on your local machine, there is no risk of your data being used for training or being exposed in a third-party server breach.

Do I need a high-end GPU to run Local LLMs for Microsoft Word?

Not necessarily, but hardware dictates your speed. For the best experience, a dedicated NVIDIA GPU or a modern NPU (Neural Processing Unit) is recommended. While higher-end hardware (like an RTX 4090 or 5090) provides the near-instant performance needed for heavy drafting, many smaller models are now optimized to run surprisingly well on mid-range consumer GPUs.
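As a rough way to judge whether a model will fit on your hardware, you can estimate its memory footprint from the parameter count and the quantization level. This is a back-of-the-envelope sketch only: the 1.2 overhead factor for runtime buffers and the KV cache is an assumption, the function names are hypothetical, and real usage varies by backend and context length.

```python
def estimate_weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits_per_weight / 8


def estimate_total_memory_gb(params_billions: float, bits_per_weight: int,
                             overhead_factor: float = 1.2) -> float:
    """Weights plus an ASSUMED overhead for KV cache and runtime buffers."""
    return estimate_weight_memory_gb(params_billions, bits_per_weight) * overhead_factor


def fits_on_device(params_billions: float, bits_per_weight: int,
                   device_memory_gb: float) -> bool:
    """Return True if the estimated total fits in the given device memory."""
    return estimate_total_memory_gb(params_billions, bits_per_weight) <= device_memory_gb


if __name__ == "__main__":
    # Sizes taken from the models discussed above; 4-bit quantization assumed.
    for name, size in [("Phi-4-mini-reasoning", 3.8), ("Gemma 3 (27B)", 27)]:
        print(name, round(estimate_total_memory_gb(size, 4), 2), "GB (estimate)")
```

By this estimate, a 3.8B model at 4-bit quantization needs only a few gigabytes, while a 27B model at the same quantization calls for a GPU or unified memory pool in the 16 GB range or larger.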

Can I use GPTLocalhost on macOS to run local LLMs in Microsoft Word?

Yes, you can. GPTLocalhost is fully compatible with macOS, allowing you to run powerful LLMs like Phi-4 or gpt-oss models directly on your Mac. If you are using a Mac with Apple Silicon (M-series chips), you will experience exceptional performance because it is optimized to leverage the Unified Memory and Neural Engine of Apple’s hardware.

How do Local LLMs for Microsoft Word compare to Microsoft Copilot?

The primary advantages of Local LLMs for Microsoft Word are privacy and cost. While Copilot requires a monthly subscription and an internet connection, local models are free to use after setup and work entirely offline. Furthermore, you have the freedom to switch between different LLMs depending on whether you need creative writing or logical reasoning.

Can I use Local LLMs for Microsoft Word on an Intranet or for a team?

Yes. Using LocPilot for Word, organizations can host a single powerful model on a local server and allow team members to connect via their own Word Add-ins. This maintains a “Private Cloud” environment where all intellectual property remains within the company’s internal network.