Last Updated on February 21, 2026

The Free-Tier API: A Genuine “Free Lunch”

In today’s hyper-competitive AI landscape, industry leaders like Mistral, Google, OpenAI, and Anthropic are battling for dominance by offering powerful free-tier APIs that strategic users can treat as a legitimate “free lunch.” By implementing a hybrid strategy that utilizes an LLM proxy paired with local redaction, you can bypass the traditional “privacy tax” and offload data security to your own machine. This approach allows you to channel the full capabilities of the Mistral API directly into Microsoft Word, resulting in a high-performance, zero-cost workflow that keeps your sensitive data strictly under your own control.

📖 Part of the Hybrid AI Strategy Guide: This post is a deep-dive cluster page within our Hybrid AI Integration series—your definitive roadmap for bridging high-performance cloud intelligence with total local data control.

The Privacy Catch

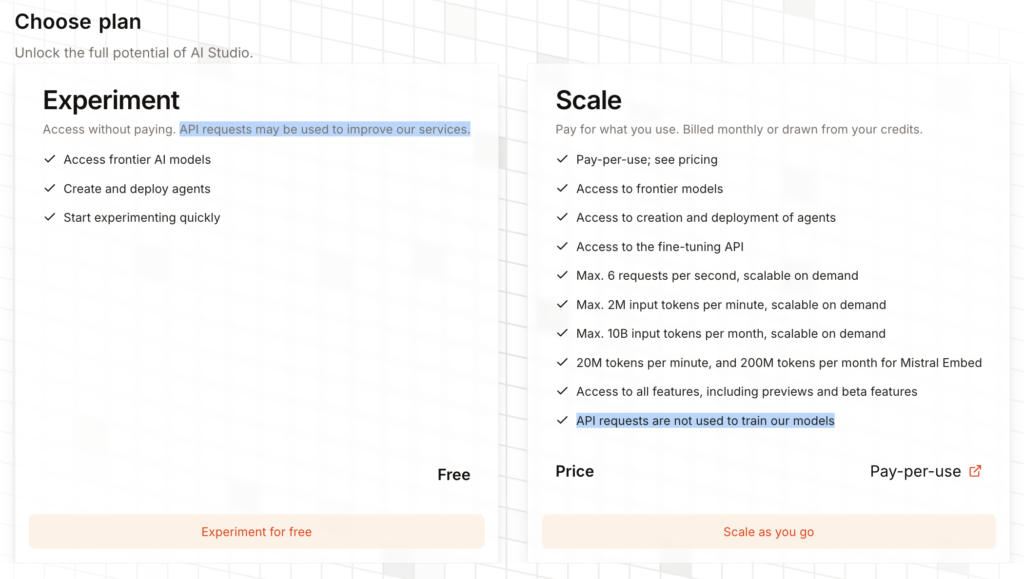

According to Mistral’s subscription plans, as shown in the accompanying screenshot, the free tier offers access to frontier models. However, there is a significant trade-off: data submitted through the free tier may be used by Mistral to improve its products.

The Solution: Bridging the Gap with Local Redaction

Unlike Microsoft Copilot—which requires a $20/month subscription and restricts you to a pre-selected lineup of models—a hybrid workflow powered by GPTLocalhost offers a more strategic path. While Copilot now supports a range of models from OpenAI and Anthropic, you are still bound by Microsoft’s specific terms, privacy defaults, and monthly fees.

By combining the free Mistral API with a local redaction engine, you bypass the “subscription tax” entirely. This setup gives you access to frontier cloud models for $0 while ensuring that the sensitive details in your drafts are replaced with placeholders before anything leaves your machine. You get the capability of a cloud model while the real names and proprietary details stay on your own hardware.

To harness this “free lunch” without exposing proprietary data, the hybrid strategy utilizes a three-step redaction workflow:

- Redact Locally: Before your draft leaves your machine, sensitive names and proprietary details are replaced with placeholders (e.g., `[Person_A]` or `[Place_X]`) within your local environment.

- Process via LLM Proxy: The sanitized text is sent to Mistral through an LLM proxy, where you configure your own API key with your preferred LLM provider. GPTLocalhost connects only to the proxy, not directly to the model. As a result, GPTLocalhost does not know whether the LLM is running locally or in the cloud, and your API key is never exposed to the add-in. This setup lets you switch seamlessly between different cloud providers or local models without modifying your core Word configuration.

- Restore Locally: After the cloud AI returns the refined response, you can trigger the “unredact” prompt to restore your original sensitive data directly within Microsoft Word. This ensures the final text is completed locally, without the cloud service ever accessing the true, unredacted version of your content.
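The redact and restore steps above can be sketched in a few lines of Python. This is only an illustration of the idea, not how GPTLocalhost implements it: the function names `redact` and `restore`, the term-to-category dictionary, and the lettering scheme for placeholders are all assumptions made for this example, and the cloud call is replaced by a stand-in string edit.

```python
from string import ascii_uppercase


def redact(text: str, sensitive_terms: dict[str, str]) -> tuple[str, dict[str, str]]:
    """Swap each sensitive term for a placeholder such as [Person_A].

    `sensitive_terms` maps a literal term to a category label, e.g.
    {"Alice Chen": "Person"}. Returns the sanitized text plus a
    placeholder -> original mapping that must stay on the local machine.
    """
    mapping: dict[str, str] = {}
    counters: dict[str, int] = {}
    # Handle longer terms first so "Alice Chen" is replaced before "Alice".
    for term in sorted(sensitive_terms, key=len, reverse=True):
        if term not in text:
            continue
        category = sensitive_terms[term]
        index = counters.get(category, 0)
        counters[category] = index + 1
        # Letters A..Z only: this sketch supports up to 26 terms per category.
        placeholder = f"[{category}_{ascii_uppercase[index]}]"
        mapping[placeholder] = term
        text = text.replace(term, placeholder)
    return text, mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    """Put the original terms back into text returned by the cloud model."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text


draft = "Alice Chen will meet Bob in Lisbon."
terms = {"Alice Chen": "Person", "Bob": "Person", "Lisbon": "Place"}

sanitized, mapping = redact(draft, terms)
print(sanitized)  # [Person_A] will meet [Person_B] in [Place_A].

# Stand-in for the cloud model's reply, which only ever sees placeholders.
reply = sanitized.replace("will meet", "is scheduled to meet")
print(restore(reply, mapping))  # Alice Chen is scheduled to meet Bob in Lisbon.
```

Only `sanitized` would be sent through the proxy; `mapping` never leaves the machine. Plain string replacement is the simplest possible approach and will miss variants such as misspellings or nicknames, so a real redaction engine needs more careful matching.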

Key Comparison: Copilot vs. GPTLocalhost Hybrid

Choosing between native integration and a hybrid strategy is a trade-off between convenience and control. While Microsoft Copilot offers a “one-click” experience, it locks you into a paid subscription and limited ecosystem. In contrast, the GPTLocalhost Hybrid approach treats AI as an interchangeable utility; by using an LLM proxy, you can leverage the Mistral free API for Word at zero cost with a “privacy-first” posture no cloud-only provider can match.

| Feature | Microsoft Copilot | GPTLocalhost Hybrid |
| --- | --- | --- |
| Monthly Cost | Paid Subscription | $0 (Free Tier) |
| Mistral Integration | ❌ No | ✅ Yes |
| Claude Integration | ✅ Yes | ✅ Yes |
| ChatGPT Integration | ✅ Yes | ✅ Yes |
| Integration with More LLMs | ❌ No | ✅ Yes |
| Privacy Control | Cloud-only processing | Local Redaction + Proxy |

Hybrid in Action: The Best of Both Worlds

The Hybrid AI Strategy optimizes your workflow by treating cloud and local models as interchangeable utilities routed based on privacy, cost, and complexity. By using an LLM proxy as a central controller, you turn Microsoft Word into a powerhouse no longer limited by a single provider’s subscription or data policy, providing you with three key advantages:

- Zero-Cost Power: Leverage the “free lunch” of the Mistral API for complex reasoning and long-context analysis without the subscription fee.

- Total Data Ownership: By redacting data locally before it hits the proxy, you use the cloud as a “blind” processing engine. The cloud handles the logic, but the details you redacted never leave your hardware.

- Future-Proof Flexibility: Unlike the rigid walls of Copilot, you can swap cloud and local models easily, ensuring you always have the best tool for the specific task at hand.
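Routing by privacy, cost, and complexity can be pictured as a small decision function. The sketch below is purely illustrative: `Task`, `choose_route`, the `free_quota_left` flag, and the route labels `"local"` and `"cloud-free-tier"` are hypothetical names invented for this example, and neither GPTLocalhost nor any particular LLM proxy is claimed to expose such an interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    redacted: bool           # True once sensitive details were replaced locally
    complex_reasoning: bool  # True for long-context or multi-step work


def choose_route(task: Task, free_quota_left: bool = True) -> str:
    """Return a hypothetical route label: "local" or "cloud-free-tier"."""
    # Privacy first: unredacted text is never sent to the cloud.
    if not task.redacted:
        return "local"
    # Cost: only use the cloud while the free tier is still available.
    if not free_quota_left:
        return "local"
    # Complexity: send demanding work to the cloud, keep simple edits local.
    return "cloud-free-tier" if task.complex_reasoning else "local"


print(choose_route(Task(redacted=True, complex_reasoning=True)))   # cloud-free-tier
print(choose_route(Task(redacted=False, complex_reasoning=True)))  # local
```

Checking privacy before cost and complexity means a task that was not redacted stays local no matter how demanding it is, which matches the privacy-first ordering described above.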

Take full control of your hybrid AI integration today. Start building a secure, professional-grade drafting environment—no subscriptions, no data leaks, and no compromises.

For Intranet and Teamwork: Explore LocPilot for Word to bring private, local AI to your entire organization.

…